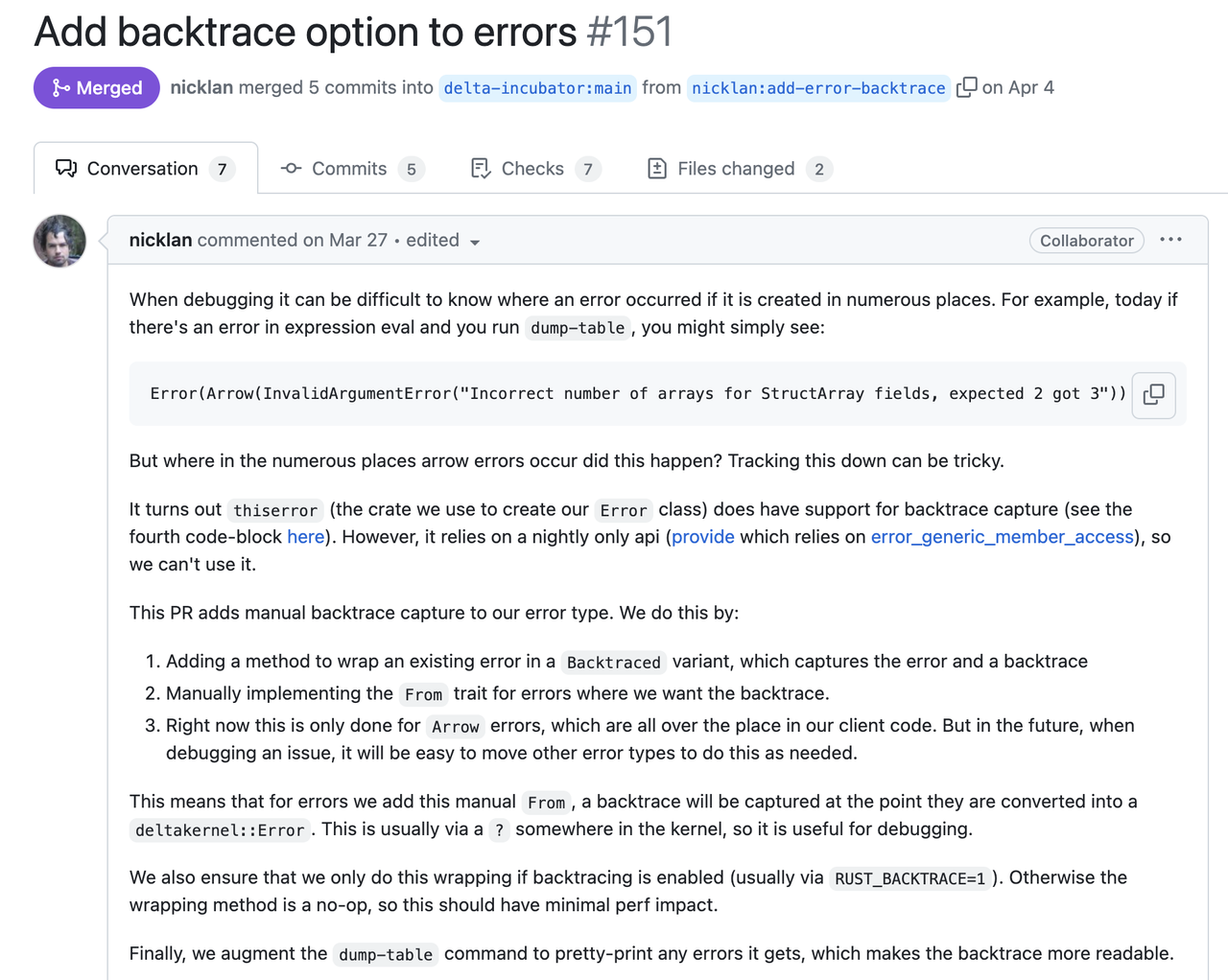

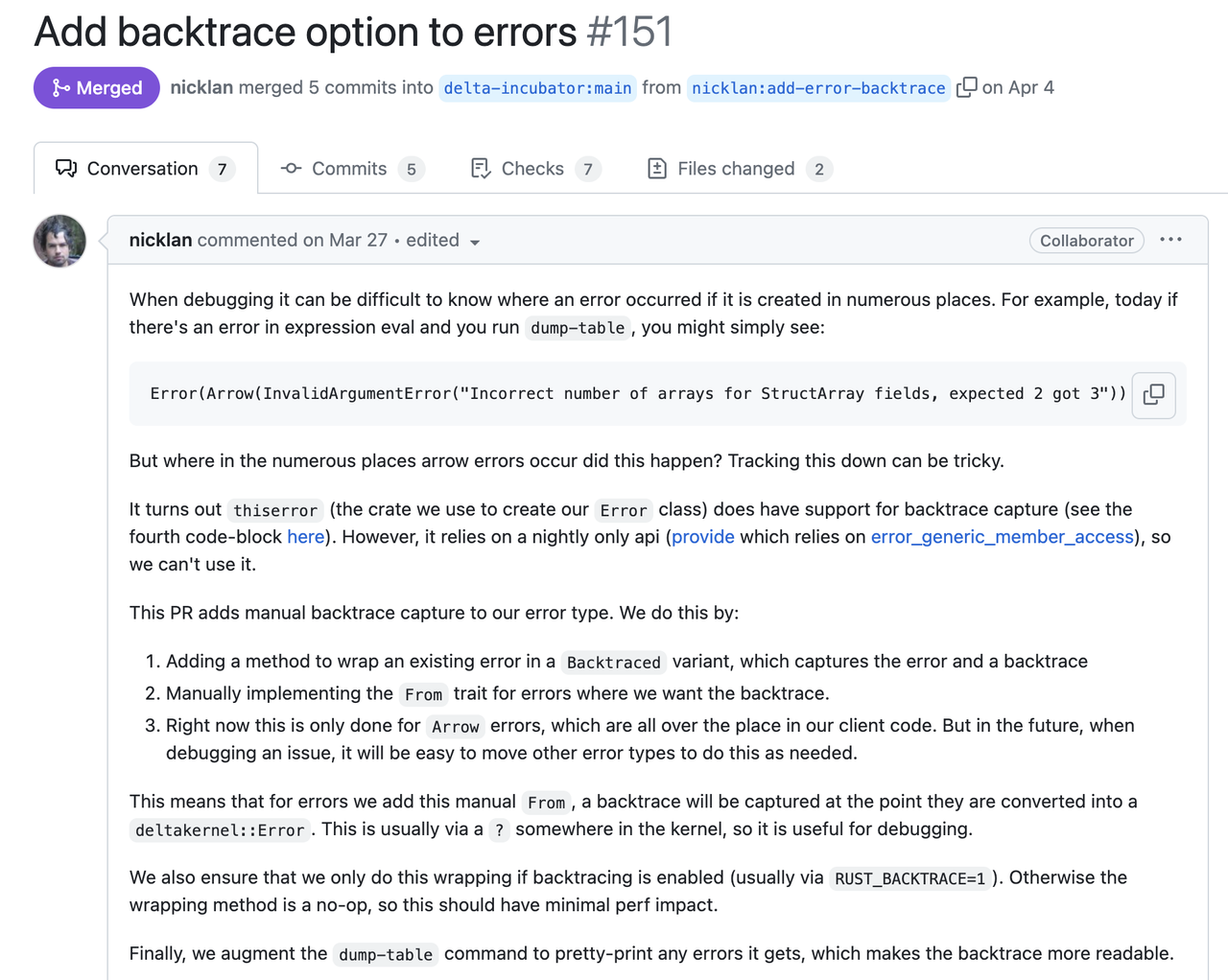

**A good error report is not only about how the error is constructed; more importantly, it is about what a human can understand from its cause and trace. We call it Stacked Error.** The format should feel intuitive, and you have probably seen something similar elsewhere, such as a backtrace.

From this log, it's easy to understand the whole picture with full context, from the user-facing behavior down to the root cause, plus the exact line and column number where each error is propagated. You will know that this error is *"from the query "blabla", the fifth package's header is corrupted"*. It's likely to be invalid user input, and we may not need to handle it on the server side.

This example shows the critical information that an error should contain:

- **The root cause** that tells what is happening.
- **The full context stack** that can be used in debugging or figuring out where the error occurs.
- **What happens from the user's perspective.** Decide whether we need to expose the error to users.

The root cause is often clear, as in the DecodeMessage example above, as long as the library or function we use implements its error type correctly. But having only the root cause may not be enough. Here is another [piece of evidence](https://github.com/delta-incubator/delta-kernel-rs/pull/151) from Delta Lake, developed by Databricks.

In the following sections, we will focus on the context stack and the way to present errors, and show how we implement them, so that you can hopefully reproduce the same practices as in GreptimeDB.

### System Backtrace

So, now you have the root cause (`DecodeMessage(serde_json: invalid character at 1)`). But it's not clear at which step this error occurs: when decoding the header, or the body?

An intuitive thought is to capture the backtrace. `.unwrap()` is the first choice, since the backtrace shows up when the error occurs (of course, this is bad practice). It gives you a complete call stack along with line numbers.
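To look at such a call stack without panicking, a backtrace can also be captured explicitly. The following is a minimal sketch using only the standard library's `std::backtrace::Backtrace`; the function names `decode_header` and `decode_msg` are placeholders for illustration and are not taken from GreptimeDB.

```rust
use std::backtrace::{Backtrace, BacktraceStatus};

// Placeholder for the innermost application function where an error would occur.
#[inline(never)]
fn decode_header() -> Backtrace {
    // `force_capture` walks the stack regardless of the RUST_BACKTRACE setting.
    Backtrace::force_capture()
}

// Placeholder for an intermediate application function.
#[inline(never)]
fn decode_msg() -> Backtrace {
    decode_header()
}

fn main() {
    let bt = decode_msg();
    if bt.status() == BacktraceStatus::Captured {
        let rendered = bt.to_string();
        // The rendered trace mixes application frames with std and runtime frames.
        println!("captured {} lines of backtrace", rendered.lines().count());
        println!("{rendered}");
    } else {
        println!("backtraces are not supported on this platform");
    }
}
```

Running this in a debug build usually prints far more lines than the two application functions involved, and whether function names and line numbers appear at all depends on the debug information in the binary.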
Such a call stack contains the full trace, including lots of unrelated system stacks, runtime stacks and std stacks. To find the call in application code, you have to inspect the source code frame by frame and skip all the unrelated ones.

Nowadays, many libraries also provide the ability to capture a backtrace when an `Error` is constructed. Regardless of whether the system backtrace can provide what we truly want, it is very costly in both CPU ([#1261](https://github.com/GreptimeTeam/greptimedb/pull/1261)) and memory ([#1273](https://github.com/GreptimeTeam/greptimedb/pull/1273)).

Capturing a backtrace significantly slows down your program, as it needs to walk through the call stack and translate the pointers. Then, to be able to translate the stack pointers, we need to include a large `debuginfo` in our binary. In GreptimeDB, this means increasing the binary size by >700MB (4x compared to 170MB without debuginfo). There is also a lot of noise in the captured system backtrace, because the system can't distinguish whether the code comes from the standard library, a third-party async runtime or the application code.

There is another difference between the system backtrace and the proposed Stacked Error. The system backtrace tells us how execution reached the position where the error occurs, and you cannot control it, while the Stacked Error shows how the error is propagated.

Take the following code snippet as an example to examine the difference between the system backtrace and the virtual stack:

```rust
async fn handle_request(req: Request) -> Result {
    // propagate error with new stack and context
    let msg = decode_msg(&req.msg).await.context(DecodeMessage)?;
    // pass error to the caller directly
    verify_msg(&msg)?;
    // pass error to the caller directly
    process_msg(msg).await
}

async fn decode_msg(msg: &RawMessage) -> Result {
    // propagate error with new stack and context
    serde_json::from_slice(&msg).context(SerdeJson)
}
```
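To make the "virtual stack" side of this comparison concrete, here is a minimal, std-only sketch of an error that records a frame only where the application chooses to add context. `StackedError`, its `context` method and the three functions are hypothetical names for illustration; this is not GreptimeDB's actual implementation, and it uses `#[track_caller]` with `std::panic::Location` as a stand-in for whatever mechanism a real error library provides.

```rust
use std::fmt;
use std::panic::Location;

/// Hypothetical error type: a root cause plus one frame per propagation step.
#[derive(Debug)]
struct StackedError {
    root_cause: String,
    /// (context message, source location), innermost frame first.
    frames: Vec<(String, &'static Location<'static>)>,
}

impl StackedError {
    fn new(root_cause: impl Into<String>) -> Self {
        Self { root_cause: root_cause.into(), frames: Vec::new() }
    }

    /// Pushes a frame recording the location where `context` was called.
    #[track_caller]
    fn context(mut self, msg: impl Into<String>) -> Self {
        self.frames.push((msg.into(), Location::caller()));
        self
    }
}

impl fmt::Display for StackedError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        // Outermost (most user-facing) context first, root cause last.
        for (i, (msg, loc)) in self.frames.iter().rev().enumerate() {
            writeln!(f, "{i}: {msg}, at {}:{}:{}", loc.file(), loc.line(), loc.column())?;
        }
        write!(f, "{}: {}", self.frames.len(), self.root_cause)
    }
}

fn decode_header(raw: &str) -> Result<u32, StackedError> {
    // The root cause comes from the underlying parser's own error message.
    raw.trim().parse::<u32>().map_err(|e| StackedError::new(e.to_string()))
}

fn decode_msg(raw: &str) -> Result<u32, StackedError> {
    decode_header(raw).map_err(|e| e.context("failed to decode message header"))
}

fn handle_request(raw: &str) -> Result<u32, StackedError> {
    decode_msg(raw).map_err(|e| e.context("failed to handle request"))
}

fn main() {
    if let Err(err) = handle_request("not-a-number") {
        println!("{err}");
    }
}
```

Only the two places that call `context` appear in the output, each with its file, line and column. Nothing from the standard library or a runtime shows up, and no stack walking or `debuginfo` is needed, because the locations are fixed at compile time.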
